This post looks at the resources required to serve network users during a live stress test of a cryptocurrency decentralized exchange with genuine users in the wild. The machine operator goes by the Discord name cipi and has contributed monitoring screenshots of network bandwidth, CPU load, and the number of clients connected to the SPV infrastructure. To try out Komodo smart chain technology with an Electrum server, read the tutorial and fire up your own Docker image with the blockchain dev kit.

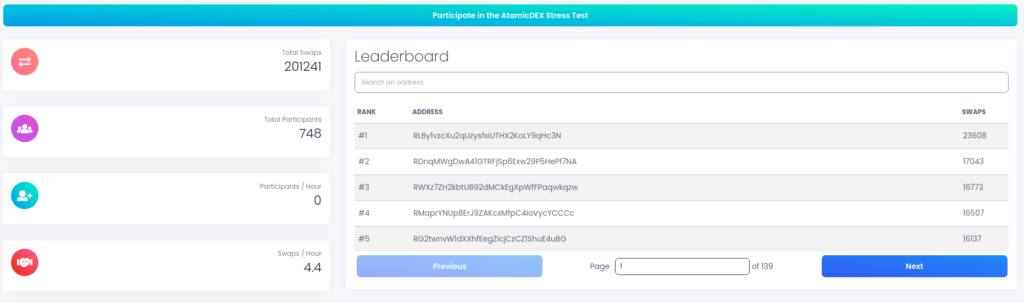

The stress test took place on December 12 and 13, 2020. 53 unique addresses completed more than 1,000 swaps each, and the top 5 addresses combined for 90,000 cross-chain atomic swaps. The total number of atomic swaps was more than 200,000 in one weekend.

Cipi has stated in Discord that the ElectrumX server infrastructure ran on dedicated servers. The clients connected to 3 separate servers, which hosted Bitcoin, Litecoin, Komodo, and Parity for Ethereum and ERC20 tokens.
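For readers who have not talked to an Electrum-style server before, here is a minimal sketch of what a lite client request looks like on the wire: newline-terminated JSON-RPC over a TCP socket. The helper names (`build_request`, `parse_response`, `query`) and the client name `"demo-client"` are made up for illustration, the protocol version `"1.4"` is an assumption, and no host or port from the stress test is implied. You would need to supply your own server address.

```python
import json
import socket


def build_request(method, params, request_id=0):
    """Encode one JSON-RPC request as a newline-terminated byte string."""
    payload = {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
    return (json.dumps(payload) + "\n").encode()


def parse_response(line):
    """Decode one JSON-RPC response line and return its result field."""
    msg = json.loads(line)
    if msg.get("error"):
        raise RuntimeError(msg["error"])
    return msg["result"]


def query(host, port, method, params, timeout=10):
    """Send a single request over plain TCP and read a single response line.

    host and port are placeholders you supply yourself; servers that only
    accept TLS connections would need the socket wrapped with the ssl module.
    """
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(build_request(method, params))
        with sock.makefile("rb") as reader:
            return parse_response(reader.readline())


# Example call (not executed here, fill in your own server):
# query("your.electrum.host", 50001, "server.version", ["demo-client", "1.4"])
```

Every connected client counted in the graphs holds one such socket open, which is why the number of simultaneous connections is the figure being monitored.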

Each server has an AMD EPYC 7502P, 384 GB of RAM, and 3x NVMe SSDs in a RAID 0 configuration.

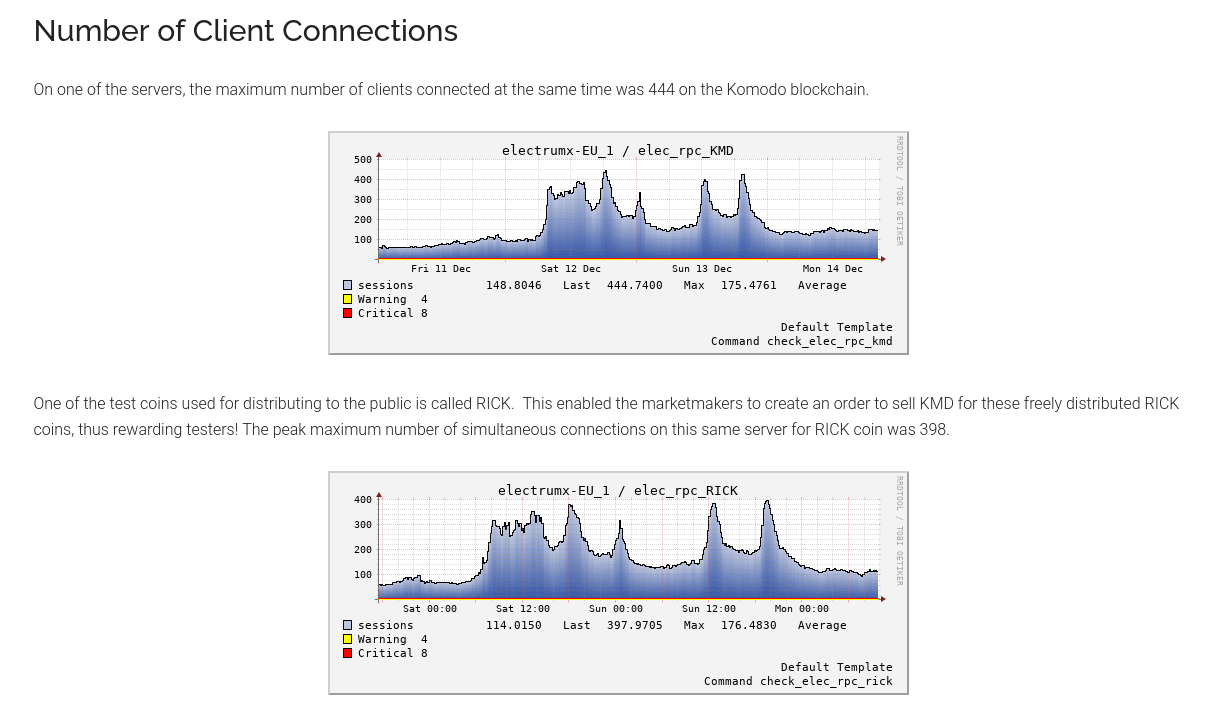

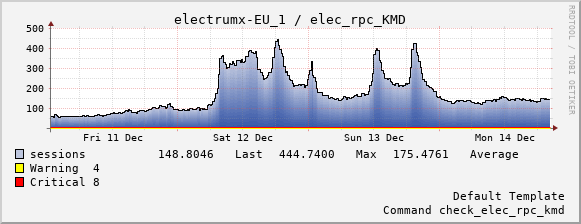

On one of the servers, the maximum number of clients connected at the same time was 444 on the Komodo blockchain.

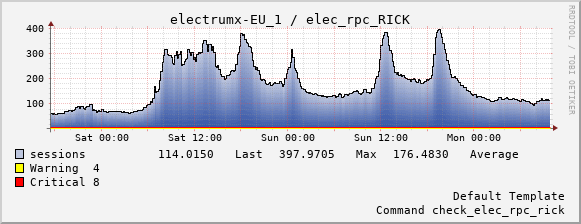

One of the test coins distributed to the public is called RICK. Market makers could create orders selling KMD for these freely distributed RICK coins, thus rewarding testers! The peak number of simultaneous connections for RICK on this same server was 398.

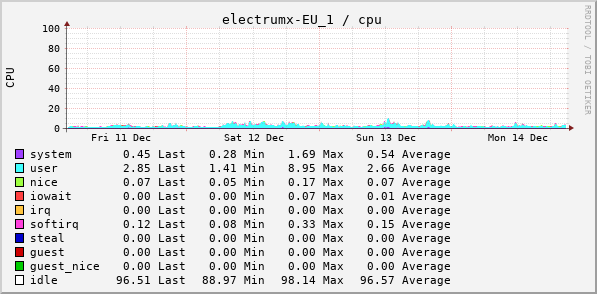

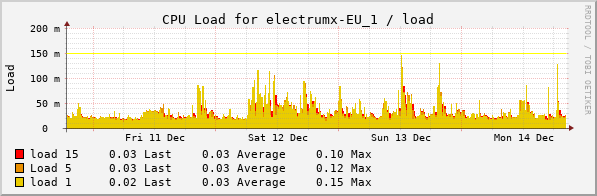

Each server has 64 threads and 384 GB of RAM. They are beasts. The machines ran under minimal load while handling approximately 400 concurrent users and syncing 5+ blockchains.

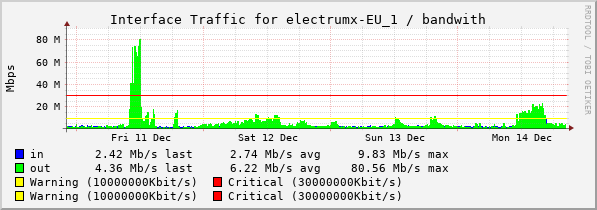

Bandwidth consumption is often the more important metric to watch when hosting multiple blockchains, especially when many new full node clients connect and want to sync. Rackspace generally comes with 5TB of data allowance. This Electrum server infrastructure is used mainly for lite clients. It looks like a constant 3Mb/s in requests and 6Mb/s in responses is required to host 500+ clients, including syncing full node chains not used in the stress test.
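To put those rates next to the 5TB allowance, here is a rough back-of-the-envelope sketch. It assumes the rates are constant over a 30-day month and uses decimal units (1 TB = 1,000,000 MB). Because it is not clear whether "Mb/s" on the graphs means megabits or megabytes, both readings are computed. The function name `monthly_transfer_tb` is made up for this example.

```python
SECONDS_PER_DAY = 86_400


def monthly_transfer_tb(rate_per_s, unit_is_bits=True, days=30):
    """Total transfer in decimal terabytes for a constant rate.

    rate_per_s is in megabits/s when unit_is_bits is True,
    otherwise in megabytes/s.
    """
    megabytes_per_s = rate_per_s / 8 if unit_is_bits else rate_per_s
    total_megabytes = megabytes_per_s * SECONDS_PER_DAY * days
    return total_megabytes / 1_000_000


requests_rate, responses_rate = 3, 6  # figures quoted in the post
allowance_tb = 5                      # data allowance quoted in the post

for label, is_bits in (("megabits/s", True), ("megabytes/s", False)):
    total = monthly_transfer_tb(requests_rate + responses_rate, is_bits)
    verdict = "within" if total <= allowance_tb else "over"
    print(f"read as {label}: {total:.2f} TB per 30 days ({verdict} {allowance_tb} TB)")
```

If the figures are megabits, the combined traffic fits inside the allowance with room to spare. If they are megabytes, it does not, so it is worth checking the units on your own monitoring before sizing a plan.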

These are ballpark stats and no meaningful analysis can be made, except that this single server has the potential to host an order of magnitude more clients. To host many orders of magnitude more, making geo-DNS queries available to users' software and running more servers will work.

Thank you cipi for providing these graphs.